Floating-point operations per second, or flops, is a theoretical measurement of the peak number of floating-point calculations a piece of hardware can perform each second, and it is often used to describe hardware performance. The term “teraflops,” or “trillion flops,” comes up frequently around recent gaming consoles such as the PlayStation 5 and the Xbox Series X, but it applies to any device that contains a computing chip. The issue, however, is that a teraflops rating has never reliably indicated real-world performance.

This is especially visible in the graphics card market. In July 2019, AMD released the RX 5700 XT, a card that landed in the same performance class as NVIDIA’s GTX 1080 Ti. While the 5700 XT has a rating of around 9.8 teraflops, the 1080 Ti boasts a stronger rating of around 11.3. Comparing the two values, the 1080 Ti should be about 15.3% faster than the 5700 XT on paper.

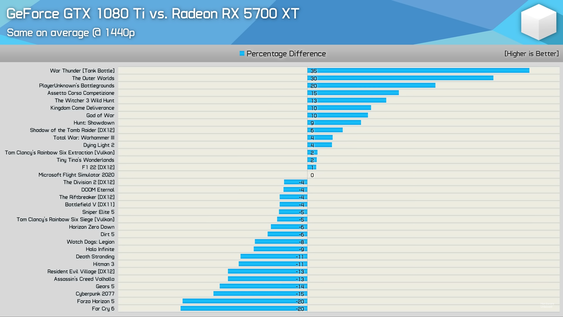

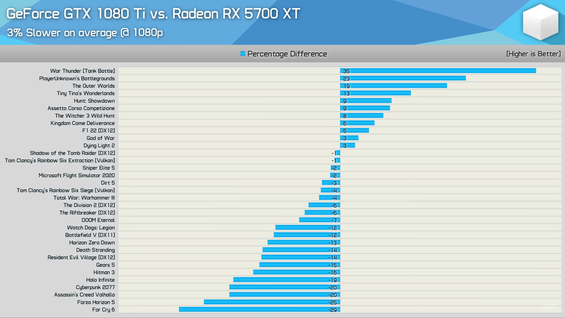

Well, let’s take a look at some real-world testing results:

It appears that “15.3% faster” was in fact not the case. Hardware Unboxed tested both cards, and as the charts above show, the 1080 Ti was on average the same speed as the 5700 XT at 1440p and was even 3% slower at 1080p.[1]

And no, this comparison is not an anomaly. The RTX 3080 has nearly triple the TFLOPS rating of the RTX 2080 (29.8 versus 10.7), yet it is only about 65% faster at 4K resolution.

These results only begin to cast doubt on teraflops as a measure of computational performance; to challenge it further, we need to understand what a teraflops rating actually represents.

A flops value is calculated by multiplying three numbers together: the clock speed (in cycles per second), the number of cores, and the number of floating-point operations each core can perform per cycle. To convert from flops to teraflops, this product is divided by one trillion.
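To make the arithmetic concrete, here is a minimal Python sketch of that expression. The function name `teraflops` is hypothetical, and the sketch assumes the usual GPU convention that each shader core completes one fused multiply-add per cycle, counted as 2 floating-point operations, at its published boost clock (2560 stream processors at 1905 MHz for the RX 5700 XT, 3584 CUDA cores at 1582 MHz for the GTX 1080 Ti). Under those assumptions it reproduces the ratings quoted earlier.

```python
def teraflops(clock_hz: float, cores: int, flops_per_cycle: float) -> float:
    """Theoretical peak: clock x cores x FLOPs per core per cycle, in TFLOPS."""
    return clock_hz * cores * flops_per_cycle / 1e12


# Published boost clocks and shader counts. A fused multiply-add (FMA)
# counts as 2 floating-point operations, so each shader does 2 FLOPs/cycle.
rx_5700_xt = teraflops(clock_hz=1.905e9, cores=2560, flops_per_cycle=2)
gtx_1080_ti = teraflops(clock_hz=1.582e9, cores=3584, flops_per_cycle=2)

print(f"RX 5700 XT:  {rx_5700_xt:.2f} TFLOPS")   # prints 9.75
print(f"GTX 1080 Ti: {gtx_1080_ti:.2f} TFLOPS")  # prints 11.34

# The "on paper" advantage quoted in the article uses the rounded ratings.
paper_advantage = (11.3 / 9.8 - 1) * 100
print(f"1080 Ti on-paper advantage: {paper_advantage:.1f}%")  # prints 15.3
```

Nothing besides clock, core count, and operations per cycle enters the calculation, which is exactly why the result says so little about how the card behaves in a game.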

The expression might seem too simple, and in fact it is. What part of it accounts for the processor’s memory or bus width? And after a year of driver updates, the 5700 XT’s performance increased by about 6%[1]; so where does the driver factor in? These are only a few of the many variables the expression leaves out. So while it does describe floating-point throughput, it does not indicate real-world performance, which dooms teraflops to be a flawed measurement.
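One way to see why memory and bus width matter is a simplified roofline model, a common technique that is not part of the original argument: attainable throughput is the lower of the peak compute rating and memory bandwidth multiplied by the workload’s arithmetic intensity (floating-point operations per byte read). The minimal sketch below assumes the cards’ published memory configurations (256-bit GDDR6 at 14 Gbps for the 5700 XT, 352-bit GDDR5X at 11 Gbps for the 1080 Ti); the function names and the intensity of 4 FLOPs per byte are hypothetical.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width (bits) x per-pin data rate / 8."""
    return bus_width_bits * data_rate_gbps / 8


def attainable_tflops(peak_tflops: float, bandwidth_gbs: float,
                      flops_per_byte: float) -> float:
    """Simple roofline: throughput is capped by compute or by how fast
    memory can feed data (bandwidth x arithmetic intensity)."""
    memory_bound_tflops = bandwidth_gbs * flops_per_byte / 1000  # GFLOPS -> TFLOPS
    return min(peak_tflops, memory_bound_tflops)


# Peak ratings from the clock x cores x FLOPs-per-cycle expression.
RX_5700_XT_PEAK = 9.75
GTX_1080_TI_PEAK = 11.34

# Published memory configurations.
rx_5700_xt_bw = memory_bandwidth_gbs(256, 14)   # GDDR6  -> 448 GB/s
gtx_1080_ti_bw = memory_bandwidth_gbs(352, 11)  # GDDR5X -> 484 GB/s

# Hypothetical workload performing 4 FLOPs per byte read from memory.
intensity = 4.0
a = attainable_tflops(RX_5700_XT_PEAK, rx_5700_xt_bw, intensity)
b = attainable_tflops(GTX_1080_TI_PEAK, gtx_1080_ti_bw, intensity)
memory_bound_gap = (b / a - 1) * 100

print(f"RX 5700 XT:  {a:.3f} TFLOPS attainable")          # prints 1.792
print(f"GTX 1080 Ti: {b:.3f} TFLOPS attainable")          # prints 1.936
print(f"1080 Ti advantage: {memory_bound_gap:.1f}%")      # prints 8.0
```

With this made-up intensity both cards are limited by memory, and the 1080 Ti’s lead shrinks to about 8% (its bandwidth advantage) instead of the roughly 16% its unrounded peak rating suggests. Real games mix compute-bound and memory-bound work, and they still depend on factors such as drivers that no formula like this captures.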

Let’s say you multiplied a processor’s core count by 1000 while keeping every other variable constant. Per the expression, its TFLOPS rating would also be multiplied by 1000. The issue is that so many cores would need far more cache to keep them supplied with data, and cache isn’t considered in the expression at all. So even though the chip would theoretically be 1000 times more powerful, it would be held back by its painfully disproportionate cache size.
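The thought experiment can be run through the same expression. This minimal sketch uses a hypothetical CPU (8 cores at 4 GHz, 32 floating-point operations per core per cycle, and 32 MB of shared cache; none of these figures describe a real product) and, as in the paragraph above, changes only the core count.

```python
def teraflops(clock_hz: float, cores: int, flops_per_cycle: float) -> float:
    """Theoretical peak: clock x cores x FLOPs per core per cycle, in TFLOPS."""
    return clock_hz * cores * flops_per_cycle / 1e12


# Hypothetical CPU; every value except the core count stays fixed.
CLOCK_HZ = 4e9
FLOPS_PER_CYCLE = 32
CACHE_BYTES = 32_000_000  # 32 MB shared by all cores

base = teraflops(CLOCK_HZ, 8, FLOPS_PER_CYCLE)
scaled = teraflops(CLOCK_HZ, 8 * 1000, FLOPS_PER_CYCLE)

for cores, tf in ((8, base), (8 * 1000, scaled)):
    cache_per_core_kb = CACHE_BYTES / cores / 1000
    print(f"{cores:>5} cores: {tf:9.3f} TFLOPS, "
          f"{cache_per_core_kb:7.1f} KB cache per core")
```

The rating grows by exactly 1000 (from 1.024 to 1024 TFLOPS) while the cache available to each core shrinks by the same factor, from 4000 KB to 4 KB, and the formula has no term that registers the second change.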

So how can you accurately measure a processor’s performance? We need to look at metrics that can actually be verified through testing. These include framerates and temperatures, which can be observed through benchmarking, and latency, which can be measured with a tool such as NVIDIA’s Latency Display Analysis Tool (LDAT). Metrics like these, observed in the real world, are far more reliable indicators of computational performance. Theoretical values such as teraflops are unreliable because they are calculated from specifications rather than measured.
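Framerate, unlike teraflops, comes from measurement. As a minimal sketch, assuming a benchmark run has already logged how long each frame took to render, average FPS and the relative difference between two cards can be computed as below. The function names and frame-time values are hypothetical placeholders, not real results.

```python
def average_fps(frame_times_ms: list[float]) -> float:
    """Average framerate over a run: frames rendered / total seconds."""
    total_seconds = sum(frame_times_ms) / 1000
    return len(frame_times_ms) / total_seconds


def percent_faster(fps_a: float, fps_b: float) -> float:
    """How much faster card A is than card B, in percent."""
    return (fps_a / fps_b - 1) * 100


# Hypothetical frame-time logs in milliseconds (illustrative only).
card_a_frames = [10.0, 10.0, 10.0, 10.0]
card_b_frames = [12.5, 12.5, 12.5, 12.5]

fps_a = average_fps(card_a_frames)
fps_b = average_fps(card_b_frames)
print(f"Card A: {fps_a:.1f} fps, Card B: {fps_b:.1f} fps")
print(f"Card A is {percent_faster(fps_a, fps_b):.1f}% faster")
```

Average FPS is computed as total frames divided by total time rather than as the mean of per-frame FPS values, because the latter weights every frame equally regardless of how long it stayed on screen and therefore overstates the result.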

Sources:

[1] https://www.youtube.com/watch?v=1w9ZTmj_zX4&ab_channel=HardwareUnboxed

[2] https://www.youtube.com/watch?v=OCfKrX15TOk&ab_channel=JayzTwoCents

[3] https://www.tomsguide.com/news/ps5-and-xbox-series-x-teraflops-what-this-key-spec-means-for-you